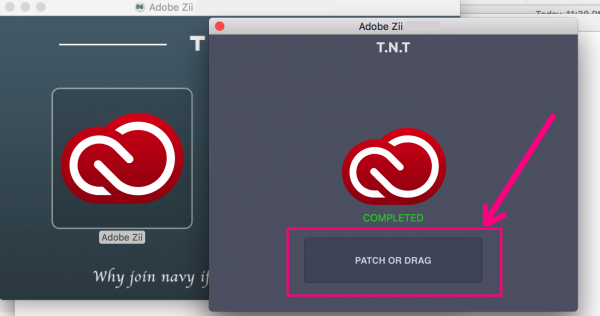

The Transformer is a type of self-attention-based neural network originally applied to NLP tasks. Recently, pure transformer-based models have been proposed to solve computer vision problems. These visual transformers usually view an image as a sequence of patches, but they ignore the intrinsic structure information inside each patch. In this paper, we propose a novel Transformer-iN-Transformer (TNT) architecture.

Abstract: Transformer is a new kind of neural architecture which encodes the input data as powerful features via the attention mechanism. Basically, the visual transformers first divide the input images into several local patches and then calculate both representations and their relationship. Since natural images are of high complexity with abundant detail and color information, the granularity of the patch dividing is not fine enough for excavating features of objects in different scales and locations. In this paper, we point out that the attention inside these local patches are also essential for building visual transformers with high performance and we explore a new architecture, namely, Transformer iN Transformer (TNT). Specifically, we regard the local patches (e.g., $16\times16$) as 'visual sentences' and present to further divide them into smaller patches (e.g., $4\times4$) as 'visual words'. The attention of each word will be calculated with other words in the given visual sentence with negligible computational costs. Features of both words and sentences will be aggregated to enhance the representation ability. Experiments on several benchmarks demonstrate the effectiveness of the proposed TNT architecture, e.g., we achieve an $81.5\%$ top-1 accuracy on the ImageNet, which is about $1.7\%$ higher than that of the state-of-the-art visual transformer with similar computational cost. The PyTorch code is available at this https URL, and the MindSpore code is at this https URL.
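The two-level patching described in the abstract can be sketched in plain Python. This is a minimal illustration, not the authors' implementation: the helper names (`split_patches`, `sentences_and_words`, `self_attention`) are hypothetical, the toy image is made up, and the attention uses identity query/key/value projections, whereas the actual model presumably uses learned projections and an outer transformer over sentences, both omitted here.

```python
import math


def split_patches(grid, size):
    """Split a 2-D list (H x W) into non-overlapping size x size patches, row-major."""
    height, width = len(grid), len(grid[0])
    if height % size or width % size:
        raise ValueError("grid dimensions must be divisible by the patch size")
    patches = []
    for top in range(0, height, size):
        for left in range(0, width, size):
            patches.append([row[left:left + size] for row in grid[top:top + size]])
    return patches


def sentences_and_words(image, sentence_size=16, word_size=4):
    """Return one entry per visual sentence; each entry lists its flattened visual words."""
    result = []
    for sentence in split_patches(image, sentence_size):
        words = split_patches(sentence, word_size)
        result.append([[px for row in word for px in row] for word in words])
    return result


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections (assumption)."""
    dim = len(tokens[0])
    scale = 1.0 / math.sqrt(dim)
    outputs = []
    for query in tokens:
        scores = [scale * sum(q * k for q, k in zip(query, key)) for key in tokens]
        peak = max(scores)  # subtract the max before exponentiating, for stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        outputs.append([
            sum(w / total * value[i] for w, value in zip(weights, tokens))
            for i in range(dim)
        ])
    return outputs


# Toy 32 x 32 single-channel image (made-up values in [0, 1)).
image = [[((r * 32 + c) % 7) / 7.0 for c in range(32)] for r in range(32)]

# 4 visual sentences (16 x 16), each holding 16 visual words (4 x 4 = 16 pixels).
sentences = sentences_and_words(image)

# Each word attends only to the other words of its own sentence.
attended = [self_attention(words) for words in sentences]
```

Because the inner attention runs over only 16 words per sentence rather than over every word in the image, the score matrix per sentence stays small, which is consistent with the abstract's remark that the word-level attention adds little computational cost.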

Submission history

From: Kai Han
[v1] Sat, 27 Feb 2021 03:12:16 UTC (4,472 KB)


[v2] Mon, 5 Jul 2021 03:31:05 UTC (7,456 KB)


DBLP - CS Bibliography: Jianyuan Guo, Chunjing Xu, Yunhe Wang
